In the previous articles, we discussed several ways of taking backups and testing the restore process to ensure the integrity of the backup file. You can visit them in case you missed something important. Here are the links:

https://www.solutionviews.com/recuperate-sql-server-data-from-unplanned-update-and-delete-process/

https://www.solutionviews.com/how-to-recover-sql-information-from-a-born-table-while-not-backups/

In this article, we're going to discuss the importance of database backup reporting. This kind of reporting is designed to eliminate any unnecessary risk to the safety and security of the data. This is the reason a high-level report, the daily health check report, is generated and sent to the SME or to the DBA responsible for managing the IT infrastructure.

Database backups are a vital component for most database administrators, regardless of which backup tool is in place and whether the backup process writes the data to disk, to tape, or to the cloud.

In general, administrators are very much concerned with getting the report daily, along with any alerts as per the defined SLAs. Administrators rely on the backup report to understand how the backups are doing, and they always want to safeguard the data.

The other area we'll cover is online backup; it is increasingly becoming the default choice for many small business databases. A database backup report is an important document that reveals specific information about the status of the data. In general, generating reports has become standard practice for any business that values its data.

A backup report should be produced after each backup job has run. The report provides detailed information, including what was backed up, what can be restored, and information about the backup media. Some of the information provided is specific to each backup type.

The following are the three important parameters that need to be reviewed at regular intervals:

- Backup failure jobs.

This is the most important metric to measure, and in most cases it needs immediate attention and action. For test or dev environments, action could wait, depending on the SLA.

- Capacity planning and forecasting.

The other report usually circulated every day is the disk space utilization report. Proactive monitoring of storage would prevent most backup failures, and it helps to forecast database growth. In turn, this can reduce many unforeseen space-related issues.

- Performance

Backup performance is an important metric for measuring the overall health of the system. It gives a heads-up about many hidden issues such as hardware resource problems, driver issues, network throughput problems, software problems, and so on.
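As a rough illustration of the capacity forecasting idea, the sketch below estimates how many days remain before a backup volume fills up. It assumes simple linear growth between the first and last reading; the function name `days_until_full` and the sample figures are hypothetical and not part of the original scripts.

```python
from datetime import date

def days_until_full(samples, capacity_gb):
    """Estimate days until the backup volume fills, from (date, used_gb) samples.

    Uses the average daily growth between the first and last sample.
    Returns None when usage is flat or shrinking.
    """
    (first_day, first_used), (last_day, last_used) = samples[0], samples[-1]
    elapsed_days = (last_day - first_day).days
    if elapsed_days <= 0:
        raise ValueError("need samples spanning at least one day")
    daily_growth = (last_used - first_used) / elapsed_days
    if daily_growth <= 0:
        return None
    return (capacity_gb - last_used) / daily_growth

# Hypothetical readings of space used on the backup drive, in GB.
history = [(date(2024, 1, 1), 400.0), (date(2024, 1, 11), 450.0)]
print(days_until_full(history, capacity_gb=500.0))  # 10.0
```

A real forecast would likely use more samples and account for retention clean-up, but even a straight-line estimate like this can flag a volume that will fill before the next review.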

The importance of backup reports needs to be measured against all of the aforesaid parameters. In some cases, we want to know how the backup ran the previous night, or we need to compare the time it took for the backup to complete successfully. These are some of the metrics one must consider for database backup reports.
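Comparing the latest backup duration against earlier runs can be sketched as follows. This is a minimal example under assumed values: the 1.5x tolerance, the function name `is_slow`, and the durations are all hypothetical.

```python
from statistics import mean

def is_slow(previous_minutes, latest_minutes, tolerance=1.5):
    """Return True when the latest run took more than `tolerance` times
    the average of the previous runs."""
    baseline = mean(previous_minutes)
    return latest_minutes > baseline * tolerance

# Hypothetical durations (minutes) of the last five nightly full backups.
recent = [42, 40, 45, 41, 43]
print(is_slow(recent, 44))   # False
print(is_slow(recent, 90))   # True
```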

Obviously, the backup age, the completion status of a backup job, and alerting and periodic notifications are considered the most basic functions any backup report should provide. In fact, most backup tools provide some form of daily backup report. That said, most backup tools do an excellent job of backing up the data, but in some cases the reports aren't straightforward and need customization.

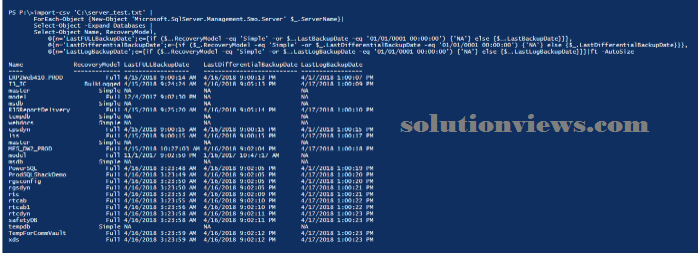

Generating backup reports using PowerShell SMO libraries:

You can customize the PowerShell script and/or T-SQL and schedule the job as required.

Prepare the script:

Install the SQL Server PowerShell module from the PowerShell repository hub, PS Gallery. The instructions in the link detail the steps for installing the SQL Server module.

Prepare the server list in a CSV file and import the CSV file using the Import-Csv cmdlet.

Prepare a simple PowerShell script by instantiating the SMO class libraries. PowerShell allows leveraging cmdlets and objects through a concept known as piping.

In the following example, we can see how the objects inherit their properties from a database.

PowerShell script:

    Import-Module SqlServer
    # The CSV file needs a header row with a ServerName column.
    Import-Csv 'C:\server_test.txt' |
        ForEach-Object { New-Object 'Microsoft.SqlServer.Management.Smo.Server' $_.ServerName } |
        Select-Object -ExpandProperty Databases |
        Select-Object Name, RecoveryModel,
            # SMO reports DateTime.MinValue (01/01/0001) when no backup of that type exists.
            @{n='LastFULLBackupDate';e={if ($_.LastBackupDate -eq [datetime]::MinValue) {'NA'} else {$_.LastBackupDate}}},
            @{n='LastDifferentialBackupDate';e={if ($_.LastDifferentialBackupDate -eq [datetime]::MinValue) {'NA'} else {$_.LastDifferentialBackupDate}}},
            # Log backups do not apply to databases in the Simple recovery model.
            @{n='LastLogBackupDate';e={if ($_.RecoveryModel -eq 'Simple' -or $_.LastLogBackupDate -eq [datetime]::MinValue) {'NA'} else {$_.LastLogBackupDate}}} |
        Format-Table -AutoSize

Let's dissect the script

- The input file, a CSV file, contains the server names

- The output file is of HTML type; the string output is converted to HTML format using string concatenation

- Email list: this parameter contains the recipients' email IDs. You can have one or more, and each ID must be separated by a comma

- Use PowerShell cmdlets to verify and install the SQL Server module from PSGallery

- Define the CSS (Cascading Style Sheet) that contains the predefined HTML styles used for HTML formatting. A CSS contains many style definitions, for example table styles, background color, border, heading, and many more. It's that simple to build an HTML document that presents the data the way you want

- Build a PowerShell cmdlet to gather a data set based on the conditions

- The logical condition is defined with an assumption of weekly full, daily differential, and hourly t-log backups

- The color combination highlights those databases that require immediate action as per the defined SLA

- Define the email notification system
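The highlighting rule in the list above can be sketched independently of PowerShell. The example below assumes the schedule named there (weekly full, daily differential, hourly log) and mirrors the report's colors; the function name `cell_color` and the sample timestamps are hypothetical, and the age comparison is a simplification rather than a line-for-line port of the script.

```python
from datetime import datetime, timedelta

# Assumed schedule: weekly full, daily differential, hourly log backups.
def cell_color(backup_type, last_backup, now, recovery_model="FULL"):
    """Return the highlight color for a report cell, or None if within SLA."""
    if backup_type == "log" and recovery_model.upper() == "SIMPLE":
        return None  # log backups do not apply to SIMPLE recovery
    limits = {
        "full": (timedelta(days=7), "RED"),
        "differential": (timedelta(days=1), "CYAN"),
        "log": (timedelta(hours=1), "YELLOW"),
    }
    max_age, color = limits[backup_type]
    return color if now - last_backup > max_age else None

now = datetime(2024, 1, 15, 12, 0)
print(cell_color("full", datetime(2024, 1, 5), now))          # RED
print(cell_color("log", datetime(2024, 1, 15, 11, 30), now))  # None
```

Keeping the thresholds in one table makes it easier to adjust them when the SLA changes.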

Let's save the following content as Databasbackup.ps1.

    # Change the values of the following variables as needed
    $ServerList = 'C:\server_test.txt'
    $OutputFile = 'C:\output.htm'
    If (Test-Path $OutputFile) {
        Remove-Item $OutputFile
    }
    $emlist = 'pjayaram@appvion.com,prashanth@abc.com'
    $MailServer = 'sqlshackmail.mail.com'
    # CSS used to format the report table
    $HTML = '<style type="text/css">
    #Header{font-family:"Trebuchet MS", Arial, Helvetica, sans-serif;width:100%;border-collapse:collapse;}
    #Header td, #Header th {font-size:14px;border:1px solid #98bf21;padding:3px 7px 2px 7px;}
    #Header th {font-size:14px;text-align:left;padding-top:5px;padding-bottom:4px;background-color:#A23942;color:#fff;}
    #Header tr.alt td {color:#000;background-color:#EAF2D3;}
    </style>'
    $HTML += "<HTML><BODY><Table border=1 cellpadding=0 cellspacing=0 width=100% id=Header>
    <TR>
    <TH><B>Server Name</B></TH>
    <TH><B>Database Name</B></TH>
    <TH><B>RecoveryModel</B></TH>
    <TH><B>Last Full Backup Date</B></TH>
    <TH><B>Last Differential Backup Date</B></TH>
    <TH><B>Last Log Backup Date</B></TH>
    </TR>"
    # Verify that the SqlServer module is available; install it if not
    try {
        If (Get-Module SqlServer -ListAvailable) {
            Write-Verbose 'Preferred SqlServer module found'
        }
        else {
            Install-Module -Name SqlServer
        }
        # Load the module so the SMO types are available to New-Object
        Import-Module SqlServer -ErrorAction Stop
    } catch {
        Write-Host 'Check the module and version'
    }
    Import-Csv $ServerList | ForEach-Object {
        $ServerName = $_.ServerName
        $SQLServer = New-Object 'Microsoft.SqlServer.Management.Smo.Server' $ServerName
        Foreach ($Database in $SQLServer.Databases) {
            $DaysSince     = ((Get-Date) - $Database.LastBackupDate).Days
            $DaysSinceDiff = ((Get-Date) - $Database.LastDifferentialBackupDate).Days
            $HoursSinceLog = ((Get-Date) - $Database.LastLogBackupDate).TotalHours
            # Skip tempdb and model for every recovery model
            If ($Database.Name -ne 'tempdb' -and $Database.Name -ne 'model') {
                $HTML += "<TR><TD>$($SQLServer.Name)</TD><TD>$($Database.Name)</TD><TD>$($Database.RecoveryModel)</TD>"
                # Weekly full backup assumed: flag anything older than 7 days
                if ($DaysSince -gt 7) { $HTML += "<TD bgcolor='RED'>$($Database.LastBackupDate)</TD>" }
                else { $HTML += "<TD>$($Database.LastBackupDate)</TD>" }
                # Daily differential backup assumed: flag anything older than 1 day
                if ($DaysSinceDiff -gt 1) { $HTML += "<TD bgcolor='CYAN'>$($Database.LastDifferentialBackupDate)</TD>" }
                else { $HTML += "<TD>$($Database.LastDifferentialBackupDate)</TD>" }
                # Hourly log backup assumed; not applicable to the Simple recovery model.
                # Databases in other models (Full, BulkLogged) go through the log check.
                if ($Database.RecoveryModel -like 'simple') { $HTML += '<TD>NA</TD>' }
                elseif ($HoursSinceLog -gt 1) { $HTML += "<TD bgcolor='Yellow'>$($Database.LastLogBackupDate)</TD>" }
                else { $HTML += "<TD>$($Database.LastLogBackupDate)</TD>" }
                $HTML += '</TR>'
            }
        }
    }
    $HTML += '</Table></BODY></HTML>'
    $HTML | Out-File $OutputFile
    Function sendEmail {
        param($from, $to, $subject, $smtphost, $htmlFileName)
        # Read the whole report as a single string
        $body = Get-Content $htmlFileName -Raw
        $message = New-Object System.Net.Mail.MailMessage $from, $to, $subject, $body
        $message.IsBodyHtml = $true
        # Use the SMTP host passed in as a parameter
        $smtp = New-Object Net.Mail.SmtpClient($smtphost)
        $smtp.Send($message)
    }
    $date = (Get-Date).ToString('yyyy/MM/dd')
    sendEmail 'solutionviews@whatever.com' $emlist "Database Backup Report - $date" $MailServer $OutputFile

Output:

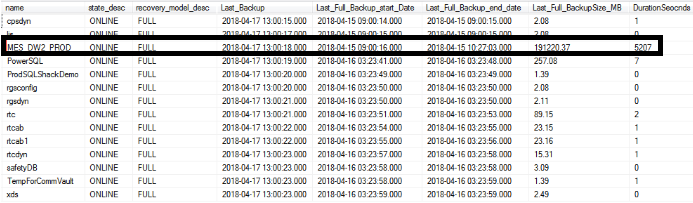
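The notification step boils down to wrapping the generated HTML in a mail message addressed to a comma-separated recipient list. The sketch below shows that idea with Python's standard `email` package; it only builds the message and does not send it, and the addresses and the function name `build_report_mail` are hypothetical.

```python
from email.message import EmailMessage

def build_report_mail(sender, recipients, subject, html_body):
    """Build (but do not send) an HTML e-mail for a comma-separated recipient list."""
    message = EmailMessage()
    message["From"] = sender
    message["To"] = ", ".join(r.strip() for r in recipients.split(","))
    message["Subject"] = subject
    # Plain-text fallback for clients that cannot render HTML.
    message.set_content("This report requires an HTML-capable mail client.")
    message.add_alternative(html_body, subtype="html")
    return message

mail = build_report_mail(
    "reports@example.com",                  # hypothetical sender
    "dba1@example.com,dba2@example.com",    # hypothetical recipients
    "Database Backup Report - 2024/01/15",
    "<html><body><table><tr><td>ok</td></tr></table></body></html>",
)
print(mail.get_content_type())  # multipart/alternative
```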

Backup reports using T-SQL:

Let's discuss report generation using T-SQL.

This SQL has three sections

- Full backup status

- Differential backup status

- T-log backup status

I will discuss the full backup part of the script. The same applies to the other backup types as well. The first part is all about aggregation and transformation to get all the rows from msdb.dbo.backupset. The aggregation is done by taking the most recent rows from the system objects for specific backup types. The transformation is done by converting the multiple rows into columns for each database. The columns also include the backup size and the time the backup took to complete.

If needed, you can refer to the link backup strategy on how to pull the data into a central repository using T-SQL and PowerShell.
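The aggregation and transformation described above (keep the most recent row per database and backup type, then turn those rows into columns) can be sketched in a few lines. The sample rows are hypothetical; the type codes 'D', 'I', and 'L' are the ones msdb.dbo.backupset uses for full, differential, and log backups.

```python
from datetime import datetime

def latest_by_type(rows):
    """Collapse (database, type, finish_time) rows into one dict per database
    holding the most recent finish time for each backup type."""
    report = {}
    for database, backup_type, finished in rows:
        per_db = report.setdefault(database, {})
        if backup_type not in per_db or finished > per_db[backup_type]:
            per_db[backup_type] = finished
    return report

# Hypothetical backup history; 'D' = full, 'I' = differential, 'L' = log.
history = [
    ("Sales", "D", datetime(2024, 1, 7, 1, 0)),
    ("Sales", "D", datetime(2024, 1, 14, 1, 0)),
    ("Sales", "L", datetime(2024, 1, 15, 11, 0)),
    ("HR",    "D", datetime(2024, 1, 14, 2, 0)),
]
for database, latest in latest_by_type(history).items():
    # One output line per database: full, differential, log columns.
    print(database, latest.get("D"), latest.get("I"), latest.get("L"))
```

Backup types with no history simply come out as missing values, which is the same role the NULL columns play in the SQL result.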

Prepare SQL:

    -- Most recent finish time per database and backup type
    WITH backupsetSummary
    AS ( SELECT bs.database_name ,
                bs.type bstype ,
                MAX(backup_finish_date) MAXbackup_finish_date
         FROM msdb.dbo.backupset bs
         GROUP BY bs.database_name ,
                  bs.type
       ),
    MainBigSet
    AS ( SELECT @@SERVERNAME servername,
                db.name ,
                db.state_desc ,
                db.recovery_model_desc ,
                bs.type ,
                CONVERT(decimal(10,2), bs.backup_size/1024.00/1024) backup_sizeinMB,
                bs.backup_start_date,
                bs.backup_finish_date,
                physical_device_name,
                DATEDIFF(MINUTE, bs.backup_start_date, bs.backup_finish_date) AS DurationMins
         FROM master.sys.databases db
         LEFT OUTER JOIN backupsetSummary bss ON bss.database_name = db.name
         LEFT OUTER JOIN msdb.dbo.backupset bs ON bs.database_name = db.name
              AND bss.bstype = bs.type
              AND bss.MAXbackup_finish_date = bs.backup_finish_date
         -- LEFT join so that databases without any backup history still appear
         LEFT OUTER JOIN msdb.dbo.backupmediafamily m ON bs.media_set_id = m.media_set_id
         WHERE db.database_id > 4   -- skip the system databases
       )
    -- select * from MainBigSet
    -- Pivot the per-type rows into one row per database
    SELECT
        servername,
        name,
        state_desc,
        recovery_model_desc,
        Last_Backup = MAX(a.backup_finish_date),
        Last_Full_Backup_start_Date = MAX(CASE WHEN a.type='D' THEN a.backup_start_date ELSE NULL END),
        Last_Full_Backup_end_date = MAX(CASE WHEN a.type='D' THEN a.backup_finish_date ELSE NULL END),
        Last_Full_BackupSize_MB = MAX(CASE WHEN a.type='D' THEN backup_sizeinMB ELSE NULL END),
        FullDurationSeconds = MAX(CASE WHEN a.type='D' THEN DATEDIFF(SECOND, a.backup_start_date, a.backup_finish_date) ELSE NULL END),
        Last_Full_Backup_path = MAX(CASE WHEN a.type='D' THEN a.physical_device_name ELSE NULL END),
        Last_Diff_Backup_start_Date = MAX(CASE WHEN a.type='I' THEN a.backup_start_date ELSE NULL END),
        Last_Diff_Backup_end_date = MAX(CASE WHEN a.type='I' THEN a.backup_finish_date ELSE NULL END),
        Last_Diff_BackupSize_MB = MAX(CASE WHEN a.type='I' THEN backup_sizeinMB ELSE NULL END),
        DiffDurationSeconds = MAX(CASE WHEN a.type='I' THEN DATEDIFF(SECOND, a.backup_start_date, a.backup_finish_date) ELSE NULL END),
        Last_Log_Backup_start_Date = MAX(CASE WHEN a.type='L' THEN a.backup_start_date ELSE NULL END),
        Last_Log_Backup_end_date = MAX(CASE WHEN a.type='L' THEN a.backup_finish_date ELSE NULL END),
        Last_Log_BackupSize_MB = MAX(CASE WHEN a.type='L' THEN backup_sizeinMB ELSE NULL END),
        LogDurationSeconds = MAX(CASE WHEN a.type='L' THEN DATEDIFF(SECOND, a.backup_start_date, a.backup_finish_date) ELSE NULL END),
        Last_Log_Backup_path = MAX(CASE WHEN a.type='L' THEN a.physical_device_name ELSE NULL END),
        [Days_Since_Last_Backup] = DATEDIFF(d, MAX(a.backup_finish_date), GETDATE())
    FROM MainBigSet a
    GROUP BY
        servername,
        name,
        state_desc,
        recovery_model_desc
    -- order by name,backup_start_date desc

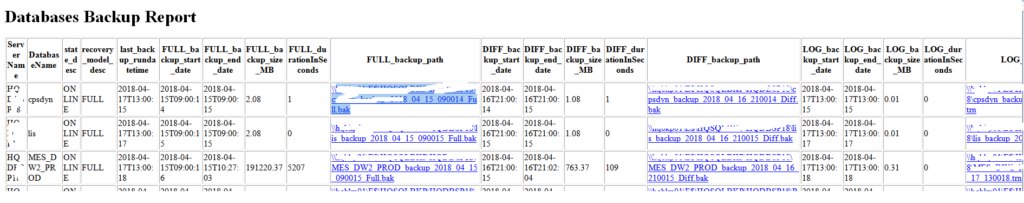

T-SQL output:

Creating an HTML backup report using XML:

In this section, we're going to discuss generating the HTML tags using the FOR XML clause. It provides a way to convert the results of an SQL query to XML. The complex SQL data is pushed to a temporary table named #temp. This makes converting the SQL data into XML much simpler. You can refer to the XML link for more information.

Next, define the SQL text fields as data sections using the FOR XML PATH clause and define the XML schema.

Configure SQL Server Agent Mail to use Database Mail, and pass the XML string as HTML-type data to send the HTML output to the intended recipients.
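To make the row-to-markup conversion concrete, here is a small sketch of the same idea outside SQL Server: each result row becomes a `<tr>` element and each value a `<td>`. It uses Python's standard `xml.etree.ElementTree`; the function name `rows_to_html` and the sample rows are hypothetical. One detail worth noting is that FOR XML leaves out the element for a NULL value by default, so the sketch writes a placeholder to keep the columns aligned.

```python
import xml.etree.ElementTree as ET

def rows_to_html(headers, rows):
    """Render rows as an HTML table, one <tr> per row and one <td> per value."""
    table = ET.Element("table", border="1")
    head = ET.SubElement(table, "tr")
    for title in headers:
        ET.SubElement(head, "th").text = title
    for row in rows:
        tr = ET.SubElement(table, "tr")
        for value in row:
            # Emit a cell even for missing values so columns stay aligned.
            ET.SubElement(tr, "td").text = "NA" if value is None else str(value)
    return ET.tostring(table, encoding="unicode", method="html")

print(rows_to_html(["Database", "Last full backup"],
                   [("Sales", "2024-01-14 01:00"), ("HR", None)]))
```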

    DECLARE @tableHTML NVARCHAR(MAX) ;
    -- Most recent finish time per database and backup type
    WITH backupsetSummary
    AS ( SELECT bs.database_name ,
                bs.type bstype ,
                MAX(backup_finish_date) MAXbackup_finish_date
         FROM msdb.dbo.backupset bs
         GROUP BY bs.database_name ,
                  bs.type
       ),
    MainBigSet
    AS ( SELECT @@SERVERNAME servername,
                db.name ,
                db.state_desc ,
                db.recovery_model_desc ,
                bs.type ,
                CONVERT(decimal(10,2), bs.backup_size/1024.00/1024) backup_sizeinMB,
                bs.backup_start_date,
                bs.backup_finish_date,
                physical_device_name,
                DATEDIFF(MINUTE, bs.backup_start_date, bs.backup_finish_date) AS DurationMins
         FROM master.sys.databases db
         LEFT OUTER JOIN backupsetSummary bss ON bss.database_name = db.name
         LEFT OUTER JOIN msdb.dbo.backupset bs ON bs.database_name = db.name
              AND bss.bstype = bs.type
              AND bss.MAXbackup_finish_date = bs.backup_finish_date
         -- LEFT join so that databases without any backup history still appear
         LEFT OUTER JOIN msdb.dbo.backupmediafamily m ON bs.media_set_id = m.media_set_id
         WHERE db.database_id > 4   -- skip the system databases
       )
    -- Pivot the per-type rows into one row per database and store them in #temp
    SELECT
        servername,
        name,
        state_desc,
        recovery_model_desc,
        Last_Backup = MAX(a.backup_finish_date),
        Last_Full_Backup_start_Date = MAX(CASE WHEN a.type='D' THEN a.backup_start_date ELSE NULL END),
        Last_Full_Backup_end_date = MAX(CASE WHEN a.type='D' THEN a.backup_finish_date ELSE NULL END),
        Last_Full_BackupSize_MB = MAX(CASE WHEN a.type='D' THEN backup_sizeinMB ELSE NULL END),
        FullDurationSeconds = MAX(CASE WHEN a.type='D' THEN DATEDIFF(SECOND, a.backup_start_date, a.backup_finish_date) ELSE NULL END),
        Last_Full_Backup_path = MAX(CASE WHEN a.type='D' THEN a.physical_device_name ELSE NULL END),
        Last_Diff_Backup_start_Date = MAX(CASE WHEN a.type='I' THEN a.backup_start_date ELSE NULL END),
        Last_Diff_Backup_end_date = MAX(CASE WHEN a.type='I' THEN a.backup_finish_date ELSE NULL END),
        Last_Diff_BackupSize_MB = MAX(CASE WHEN a.type='I' THEN backup_sizeinMB ELSE NULL END),
        DiffDurationSeconds = MAX(CASE WHEN a.type='I' THEN DATEDIFF(SECOND, a.backup_start_date, a.backup_finish_date) ELSE NULL END),
        Last_Diff_Backup_path = MAX(CASE WHEN a.type='I' THEN a.physical_device_name ELSE NULL END),
        Last_Log_Backup_start_Date = MAX(CASE WHEN a.type='L' THEN a.backup_start_date ELSE NULL END),
        Last_Log_Backup_end_date = MAX(CASE WHEN a.type='L' THEN a.backup_finish_date ELSE NULL END),
        Last_Log_BackupSize_MB = MAX(CASE WHEN a.type='L' THEN backup_sizeinMB ELSE NULL END),
        LogDurationSeconds = MAX(CASE WHEN a.type='L' THEN DATEDIFF(SECOND, a.backup_start_date, a.backup_finish_date) ELSE NULL END),
        Last_Log_Backup_path = MAX(CASE WHEN a.type='L' THEN a.physical_device_name ELSE NULL END),
        [Days_Since_Last_Backup] = DATEDIFF(d, MAX(a.backup_finish_date), GETDATE())
    INTO #temp
    FROM MainBigSet a
    GROUP BY
        servername,
        name,
        state_desc,
        recovery_model_desc ;
    -- order by name,backup_start_date desc
    SET @tableHTML =
        N'<H1>Databases Backup Report</H1>' +
        N'<table border="1">' +
        N'<tr>
    <th>Server Name</th>
    <th>DatabaseName</th>
    <th>state_desc</th>
    <th>recovery_model_desc</th>
    <th>last_backup_rundatetime</th>
    <th>FULL_backup_start_date</th>
    <th>FULL_backup_end_date</th>
    <th>FULL_backup_size_MB</th>
    <th>FULL_durationInSeconds</th>
    <th>FULL_backup_path</th>
    <th>DIFF_backup_start_date</th>
    <th>DIFF_backup_end_date</th>
    <th>DIFF_backup_size_MB</th>
    <th>DIFF_durationInSeconds</th>
    <th>DIFF_backup_path</th>
    <th>LOG_backup_start_date</th>
    <th>LOG_backup_end_date</th>
    <th>LOG_backup_size_MB</th>
    <th>LOG_durationInSeconds</th>
    <th>LOG_backup_path</th>
    <th>DaysSinceLastBackup</th>
    </tr>' +
        -- The empty strings keep adjacent td columns from merging into one element.
        -- Note: FOR XML omits the element for a NULL value, so wrap columns in
        -- ISNULL(...) if rows may contain NULLs and cells must stay aligned.
        CAST ( (
            SELECT
                td = servername, '',
                td = name, '',
                td = state_desc, '',
                td = recovery_model_desc, '',
                td = Last_Backup, '',
                td = Last_Full_Backup_start_Date, '',
                td = Last_Full_Backup_end_date, '',
                td = Last_Full_BackupSize_MB, '',
                td = FullDurationSeconds, '',
                td = Last_Full_Backup_path, '',
                td = Last_Diff_Backup_start_Date, '',
                td = Last_Diff_Backup_end_date, '',
                td = Last_Diff_BackupSize_MB, '',
                td = DiffDurationSeconds, '',
                td = Last_Diff_Backup_path, '',
                td = Last_Log_Backup_start_Date, '',
                td = Last_Log_Backup_end_date, '',
                td = Last_Log_BackupSize_MB, '',
                td = LogDurationSeconds, '',
                td = Last_Log_Backup_path, '',
                td = Days_Since_Last_Backup, ''
            FROM #temp a
            FOR XML PATH('tr'), TYPE
        ) AS NVARCHAR(MAX) ) +
        N'</table>' ;
    -- Send the HTML report through Database Mail
    EXEC msdb.dbo.sp_send_dbmail
        @profile_name = 'PowerSQL',
        @recipients = 'pjayaram@appvion.com',
        @subject = 'Database Backup',
        @body = @tableHTML,
        @body_format = 'HTML' ;
    DROP TABLE #temp ;

That’s all for now…

Wrapping up:

So far, we've seen how to manage and handle backup reports. We also discussed the importance of reports and their implications for the existing system. We can set up monitoring that allows administrators to take immediate corrective action rather than finding out later when the reports are read. We can also store the history in a centralized repository, which will help to identify backup performance trends over time.

Growth trends and reports provide the ability to forecast the required storage and make capacity decisions before they become issues.

Before choosing a reporting tool, it's good practice to establish a complete list of reporting needs and also some "nice to have" features.